Data Fulfillment in Bobsled is the process of delivering a Data Product—or a customized version of it—from Sledhouse to a Data Consumer.

It’s the final step where your curated datasets leave Sledhouse and become available in the consumer’s platform, with the correct access entitlements automatically applied.

How it works

1. Start with a Data Product

Fulfillment always begins with a Data Product in Sledhouse—your managed, analytic-ready dataset.

You can choose between:

Standard Data Product: Built directly from a Sledhouse Table, representing the complete dataset or a pre-packaged slice of it.

Customized Data Product: A version of a standard Data Product that’s filtered, transformed, or otherwise adapted for a specific consumer or business purpose.

Examples:

A global sales dataset as a standard Data Product.

A region-specific subset of that dataset as a customized Data Product.

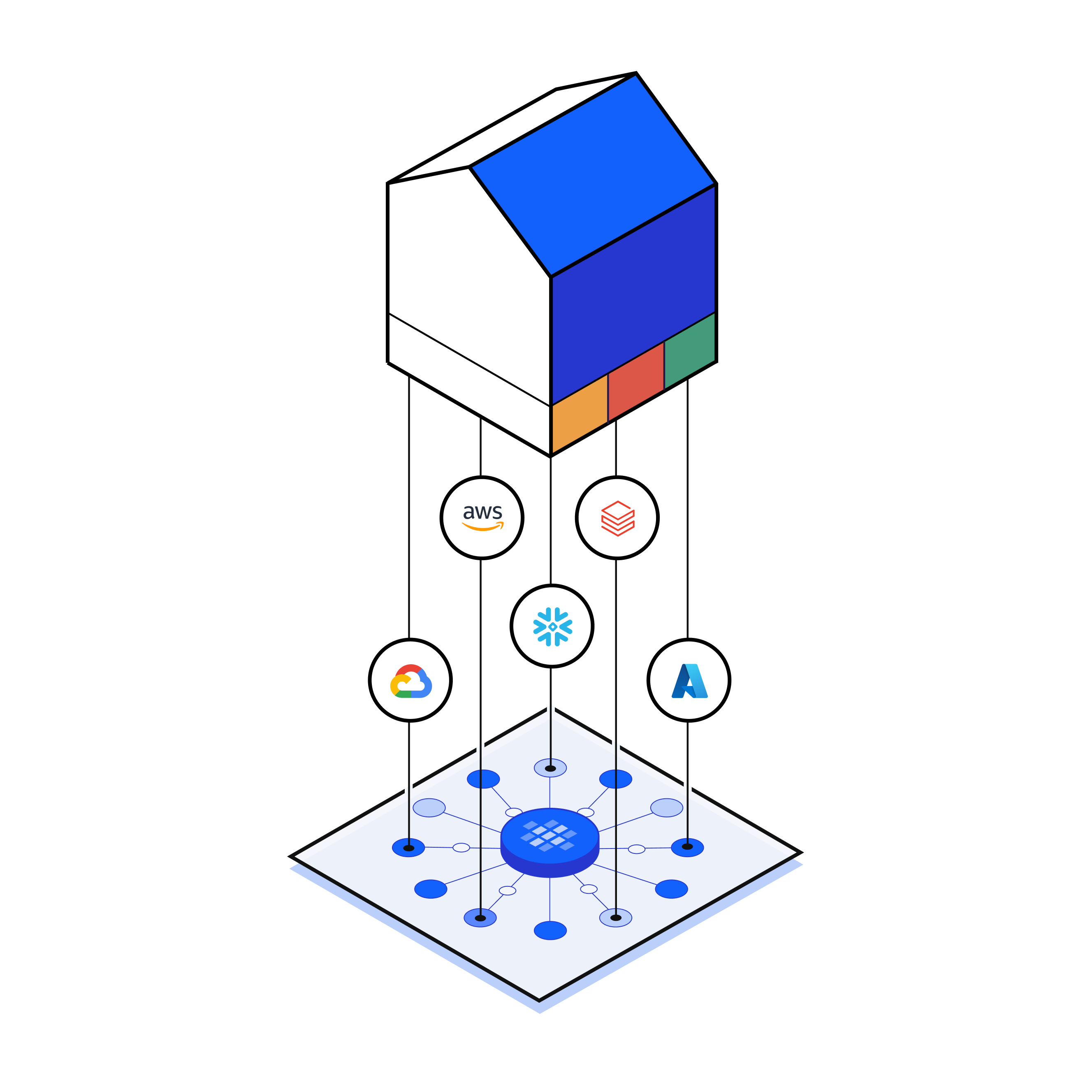

2. Add or Choose a Data Consumer

A Data Consumer is the receiving endpoint for your Data Product.

Common types include:

Cloud Data Warehouses (CDWs): Snowflake, Databricks, BigQuery

Cloud Storage: Amazon S3, Google Cloud Storage, Azure Blob Storage

Each Data Consumer entry stores:

Destination location details

Required access credentials

Optional metadata to help track usage or ownership

Learn more about how Bobsled data sharing works.
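To make the shape of a Data Consumer entry concrete, here is a minimal Python sketch of the three kinds of information listed above. The class name, field names, and example values are all hypothetical and chosen for illustration; they are not Bobsled's actual schema or API.

```python
from dataclasses import dataclass, field
from enum import Enum


class DestinationType(Enum):
    """Hypothetical grouping of the destination types listed above."""
    CLOUD_DATA_WAREHOUSE = "cdw"
    CLOUD_STORAGE = "cloud_storage"


@dataclass
class DataConsumer:
    """Illustrative record only; not Bobsled's real data model."""
    name: str
    destination_type: DestinationType
    platform: str          # e.g. "snowflake" or "s3"
    location: str          # destination location details
    credentials_ref: str   # reference to stored credentials, not the secret itself
    metadata: dict[str, str] = field(default_factory=dict)  # optional ownership/usage tags


# Example entry with made-up values.
consumer = DataConsumer(
    name="acme-analytics",
    destination_type=DestinationType.CLOUD_STORAGE,
    platform="s3",
    location="s3://acme-inbound/sales/",
    credentials_ref="vault://consumers/acme",
    metadata={"owner": "partnerships"},
)
```

Storing a reference to the credentials rather than the secret itself is a design choice of this sketch, not something the text above specifies.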

3. Bobsled Determines the Fulfillment Method

You don’t manually pick the delivery method—Bobsled automatically selects the most efficient and cost-effective approach based on the Data Product’s location and the destination type:

Zero Copy Sharing: Direct, egress-free sharing when both source and destination are in the same CDW environment.

Local Copy Fulfillment: In-region replica for performance and reduced egress costs.

Materialized Copy: Fresh export in the required file format, written to the destination (common for cloud storage endpoints).

NOTE:

Fulfillment methods are transparent to the user but affect performance, costs, and freshness.
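The selection logic described in this step can be pictured as a small decision function. The sketch below is an assumption-laden illustration, not Bobsled's implementation: the function name, the platform strings, and the reading of "same CDW environment" as "same platform and same region" are all hypothetical.

```python
from enum import Enum


class Method(Enum):
    ZERO_COPY = "zero_copy_sharing"
    LOCAL_COPY = "local_copy_fulfillment"
    MATERIALIZED_COPY = "materialized_copy"


# Assumption: these identifiers stand in for the CDW platforms named earlier.
CDW_PLATFORMS = {"snowflake", "databricks", "bigquery"}


def choose_method(source_platform: str, source_region: str,
                  dest_platform: str, dest_region: str) -> Method:
    """Pick a fulfillment method from source and destination details."""
    # Non-warehouse destinations (cloud storage) receive exported files.
    if dest_platform not in CDW_PLATFORMS:
        return Method.MATERIALIZED_COPY
    # Assumption: "same CDW environment" means same platform and same region.
    if source_platform == dest_platform and source_region == dest_region:
        return Method.ZERO_COPY
    # Otherwise fall back to a replica near the consumer.
    return Method.LOCAL_COPY


same_env = choose_method("snowflake", "us-east-1", "snowflake", "us-east-1")
cross_cdw = choose_method("snowflake", "us-east-1", "bigquery", "eu-west-1")
to_bucket = choose_method("snowflake", "us-east-1", "s3", "us-east-1")
```

In this sketch `same_env` resolves to zero copy sharing, `cross_cdw` to a local copy, and `to_bucket` to a materialized copy. The real selection may weigh additional factors such as cost and account settings.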

4. Customize for the Consumer

Fulfillment is flexible—each consumer can get a tailored version of the same Data Product without altering the original:

Row-level filters: e.g., only orders from Europe

Column control: further refine which columns to fulfill

Custom joins or calculated fields: merge lookup data or compute derived metrics
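As a rough illustration of these three customizations, the following Python sketch applies a row filter, a calculated field, and a column selection to in-memory rows while leaving the original rows unchanged. The `customize` helper and the sample data are hypothetical; in Bobsled these options are configured on the Data Product rather than written as code like this.

```python
from typing import Callable, Iterable, Optional

Row = dict[str, object]


def customize(
    rows: Iterable[Row],
    row_filter: Optional[Callable[[Row], bool]] = None,
    columns: Optional[list[str]] = None,
    calculated: Optional[dict[str, Callable[[Row], object]]] = None,
) -> list[Row]:
    """Return a tailored copy of rows; the input rows are never modified."""
    shaped_rows = []
    for row in rows:
        # Row-level filter: skip rows the consumer should not receive.
        if row_filter is not None and not row_filter(row):
            continue
        shaped = dict(row)  # shallow copy keeps the original intact
        # Calculated fields: derive new values from the source row.
        for name, fn in (calculated or {}).items():
            shaped[name] = fn(row)
        # Column control: keep only the requested columns, in order.
        if columns is not None:
            shaped = {c: shaped[c] for c in columns}
        shaped_rows.append(shaped)
    return shaped_rows


orders = [
    {"order_id": 1, "region": "Europe", "qty": 2, "unit_price": 10.0},
    {"order_id": 2, "region": "Asia", "qty": 1, "unit_price": 99.0},
    {"order_id": 3, "region": "Europe", "qty": 5, "unit_price": 4.0},
]

europe_totals = customize(
    orders,
    row_filter=lambda r: r["region"] == "Europe",
    columns=["order_id", "total"],
    calculated={"total": lambda r: r["qty"] * r["unit_price"]},
)
```

Here `europe_totals` holds only the two European orders, each reduced to `order_id` and a derived `total`, while `orders` still contains all three original rows.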

5. Apply Entitlements & Access Control

Delivery is only part of fulfillment—Bobsled also manages entitlements:

Automatically grants the required access permissions on first delivery

Ensures ongoing access remains in sync with sharing policies

Revokes access automatically when the Data Product is unshared or the consumer is removed

For CDWs: Permissions are applied at the share, schema, or view level.

For Cloud Storage: Access keys, signed URLs, or IAM policies are updated as needed.
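One way to think about keeping access in sync with sharing policies is as a reconciliation between the grants that should exist and the grants that currently exist. The sketch below shows that idea with plain sets; the `reconcile` function and the consumer and product names are hypothetical, and how Bobsled actually applies permissions on each platform is not shown.

```python
Grant = tuple[str, str]  # (consumer name, data product name)


def reconcile(desired: set[Grant],
              current: set[Grant]) -> tuple[set[Grant], set[Grant]]:
    """Return (to_grant, to_revoke) so that current converges on desired."""
    to_grant = desired - current    # shared by policy but not yet granted
    to_revoke = current - desired   # granted but no longer shared
    return to_grant, to_revoke


# Policy says acme and globex should have access; initech was removed.
desired = {("acme", "global_sales"), ("globex", "global_sales")}
current = {("acme", "global_sales"), ("initech", "global_sales")}

to_grant, to_revoke = reconcile(desired, current)
```

In this example `to_grant` contains the new `globex` grant and `to_revoke` contains the stale `initech` grant, which mirrors the grant-on-first-delivery and revoke-on-removal behavior described above.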

Fulfillment for different destination types

Bobsled adapts fulfillment depending on whether the destination is file storage or a cloud data warehouse:

| Destination Type | Fulfillment Behavior |
|---|---|
| Cloud Data Warehouse (CDW) | Data is shared as a table or view in the consumer’s warehouse. For compatible platforms (e.g., Snowflake-to-Snowflake), Bobsled uses zero copy sharing. For others, it uses a local copy depending on your settings. |
| File Storage | Data is “unloaded” from the Sledhouse Table into files in the required format (Parquet, CSV, JSON, etc.) and placed in the consumer’s bucket or path. Structure and partitioning are preserved or optimized for downstream use. |
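For the file storage case, the "unload" step can be pictured as writing rows into partitioned files under a destination path. The sketch below does this locally with CSV and the Python standard library only. The function name, the `column=value` directory naming, and the `part-0000.csv` file name are assumptions made for illustration and may not match the layout Bobsled produces.

```python
import csv
import tempfile
from collections import defaultdict
from pathlib import Path


def unload_to_csv(rows: list[dict], dest_dir: str, partition_by: str) -> list[Path]:
    """Write rows as CSV files, one directory per partition value."""
    groups = defaultdict(list)
    for row in rows:
        groups[str(row[partition_by])].append(row)

    written = []
    for value, group in sorted(groups.items()):
        # Assumption: "column=value" directory naming for partitions.
        part_dir = Path(dest_dir) / f"{partition_by}={value}"
        part_dir.mkdir(parents=True, exist_ok=True)
        path = part_dir / "part-0000.csv"
        with path.open("w", newline="") as fh:
            writer = csv.DictWriter(fh, fieldnames=list(group[0].keys()))
            writer.writeheader()
            writer.writerows(group)
        written.append(path)
    return written


rows = [
    {"order_id": 1, "region": "Europe"},
    {"order_id": 2, "region": "Asia"},
    {"order_id": 3, "region": "Europe"},
]

# A temporary directory stands in for the consumer's bucket or path.
with tempfile.TemporaryDirectory() as tmp:
    files = unload_to_csv(rows, tmp, partition_by="region")
    relative = [p.relative_to(tmp).as_posix() for p in files]
    europe_lines = files[1].read_text().splitlines()
```

Running this produces one file per region, with the European orders grouped together in their own partition directory. A real delivery would write to the consumer's bucket and could use Parquet or JSON instead of CSV.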

Why fulfillment matters

Controlled access: Only entitled consumers get the data, and only for as long as allowed

Per-consumer customization: Each consumer can have a different shape of the same product

Operational efficiency: Configure once, let Bobsled handle scheduling and delivery

Auditability: Logs track who received what, when, and how

Ready to get started? Learn how to create and manage Data Consumers and fulfill Data Products.

NOTE:

Fulfillment is Sledhouse-specific—Bobsled Transfers deliver data directly from source to destination without a managed replica. Any customizations or entitlements in that case must be handled in the source system.