This article explains how to access data delivered to a Google Cloud Storage destination managed by Bobsled. The examples below demonstrate how to use a command-line interface (CLI) to browse, copy, or sync files from the Bobsled-managed bucket to a bucket you control.

While the CLI is used in these examples, data can also be accessed programmatically or integrated into production workflows using any GCS-compatible tool, provided the requesting account has been granted the appropriate access.

Prerequisites

In Bobsled Transfers, a data transfer must have been sent to the destination before it can be consumed, and access must be configured in Bobsled for the identity that will consume the data. To learn how to configure access to the destination, see Configure a Google Cloud Storage Destination.

In Bobsled Sledhouse, at least one Data Product must have been shared to the destination.

If you are accessing the data with the Google Cloud command-line tools, you must install the CLI. Visit Installing the GCP CLI for more information.

If you are accessing the data from a share that has the “Requester Pays” feature enabled, the principal needs the Service Usage Consumer role (roles/serviceusage.serviceUsageConsumer) on the project used for billing, and that project must have a billing account enabled.
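If you administer the billing project, both Requester Pays prerequisites can be handled from the CLI. The sketch below is illustrative and not part of Bobsled: the function name `grant_requester_pays_access` is hypothetical, it assumes you are allowed to change IAM policy on the project, and setting `GCLOUD="echo gcloud"` prints the commands instead of running them.

```shell
# Hypothetical helper, not part of Bobsled or the Google Cloud CLI.
# Usage: grant_requester_pays_access PROJECT_ID MEMBER
#   MEMBER is an IAM principal such as user:jane@example.com or
#   serviceAccount:reader@my-project.iam.gserviceaccount.com
# Set GCLOUD="echo gcloud" to print the commands instead of running them.
grant_requester_pays_access() {
  project_id=$1
  member=$2
  # Grant Service Usage Consumer on the project that will be billed.
  ${GCLOUD:-gcloud} projects add-iam-policy-binding "$project_id" \
    --member="$member" \
    --role="roles/serviceusage.serviceUsageConsumer"
  # Print the project's billingEnabled field to confirm billing is on.
  ${GCLOUD:-gcloud} billing projects describe "$project_id" \
    --format="value(billingEnabled)"
}
```

For example, `grant_requester_pays_access my-billing-project user:jane@example.com`, where both arguments are placeholders.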

Consuming a data transfer via a Share in Bobsled Transfers

From the Shares list page, click on the share that you would like to access.

Once a data transfer in the share has completed, select Access Data.

Option 1: Accessing Data via Web Console

You can access the data through the web console to view and download it. To do so, make sure you are logged in to the Google Cloud console with the account that has been configured to access the Bobsled share.

Select the Web console tab in the access dialog.

Click the link icon to view the data in the GCP Web Console.

Option 2: Accessing Data via Command line

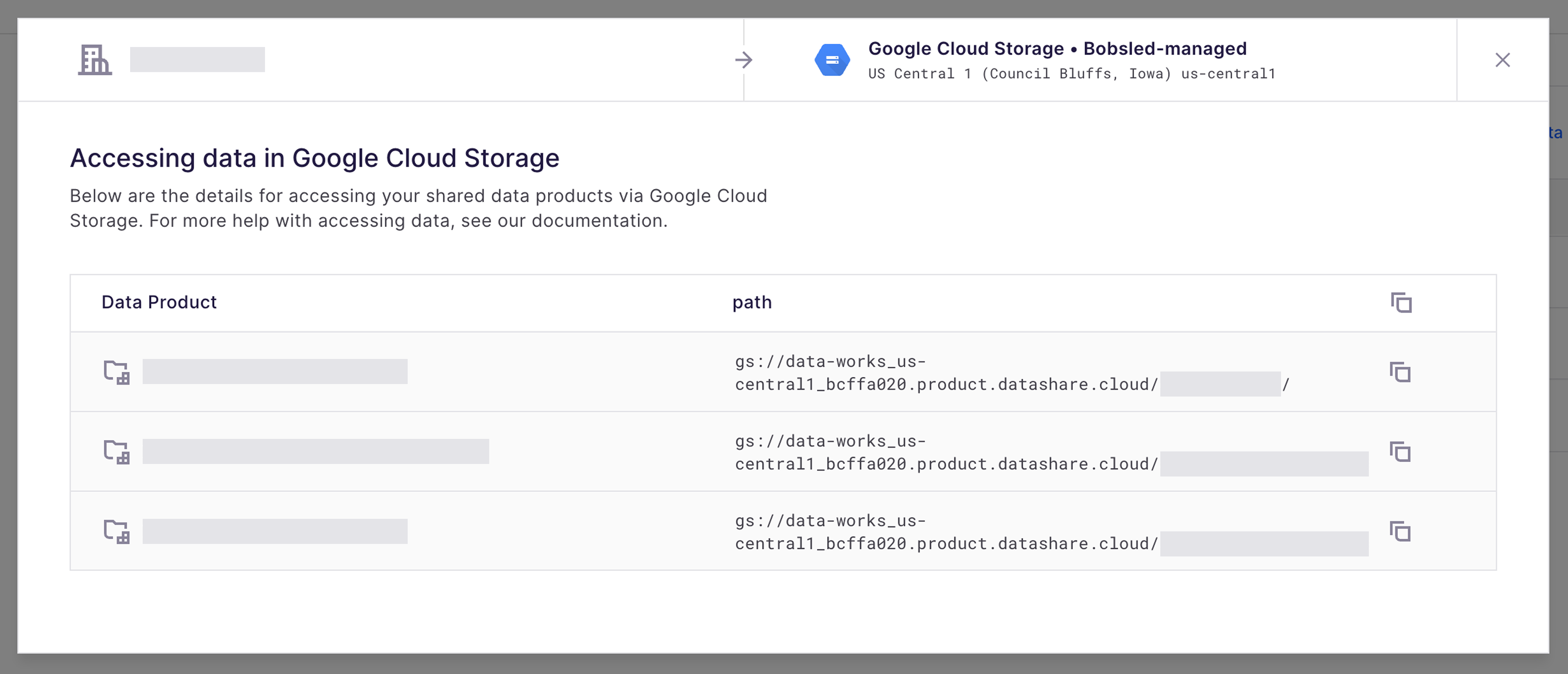

Using the Google Cloud Storage command-line tools (gsutil or gcloud), you can list, copy, and sync the contents of the data transfer in Google Cloud Storage. To use the following commands, you will need to copy the Cloud Storage URI from the Access Data dialog, as pictured above.

Log in to the GCP command line

Run the login command:

gcloud auth login

If you would like to access the data as a service account, run the command:

gcloud auth activate-service-account [service-account-email] --key-file=[path-to-private-key-file]
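Before touching the bucket, it can help to confirm which account gcloud is now using. A minimal sketch, assuming a service account key file as in the command above; the function name `login_as_service_account` is hypothetical, and `GCLOUD="echo gcloud"` prints the commands instead of running them.

```shell
# Hypothetical helper: log in as a service account, then print the account
# gcloud now treats as active.
# Usage: login_as_service_account SERVICE_ACCOUNT_EMAIL KEY_FILE
# Set GCLOUD="echo gcloud" to print the commands instead of running them.
login_as_service_account() {
  sa_email=$1
  key_file=$2
  ${GCLOUD:-gcloud} auth activate-service-account "$sa_email" \
    --key-file="$key_file"
  # Show only the currently active credentialed account.
  ${GCLOUD:-gcloud} auth list --filter=status:ACTIVE --format="value(account)"
}
```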

Access via the gcloud command-line tool

NOTE:

To use the following commands, you will need to copy the Cloud Storage bucket URI located in the access dialog as pictured above.

List the contents. To list the data in the bucket, use the gcloud storage ls command:

gcloud storage ls -r <storage-bucket-URI> --billing-project=<YOUR_BILLING_PROJECT_ID>

Parameters used:

-r (recursive) lists all objects in the bucket

Optional parameters to use with the list command:

-l (long) lists additional information about each object (size, creation time, etc.)
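Because `--billing-project` should only be passed for Requester Pays destinations, a small wrapper can add it conditionally. This is a sketch, not a Bobsled tool: `list_transfer` is a hypothetical name, it includes the optional `-l`, and `GCLOUD="echo gcloud"` prints the command rather than running it.

```shell
# Hypothetical wrapper around the gcloud storage ls command.
# Usage: list_transfer STORAGE_BUCKET_URI [BILLING_PROJECT_ID]
# Pass BILLING_PROJECT_ID only when Requester Pays is enabled.
# Set GCLOUD="echo gcloud" to print the command instead of running it.
list_transfer() {
  uri=$1
  billing_project=${2:-}
  # ${var:+...} expands to the flag only when a billing project was given.
  ${GCLOUD:-gcloud} storage ls -r -l "$uri" \
    ${billing_project:+"--billing-project=$billing_project"}
}
```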

Copy the contents to your bucket. To copy the data in the bucket, use the gcloud storage cp command:

gcloud storage cp -r <storage-bucket-URI> <your-bucket/path> --billing-project=<YOUR_BILLING_PROJECT_ID>

Parameters used:

-r (recursive) copies the entire directory tree

Optional parameters to use with the copy command:

-n (no-clobber) prevents overwriting existing files at the destination

NOTE:

The parameter --billing-project=<YOUR_BILLING_PROJECT_ID> is only required if Requester Pays was enabled during setup. If not, including it will result in an error. If you’re unsure, please contact your administrator or support.
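The copy command and the note above can be combined the same way, adding the billing flag only when a project ID is supplied. A sketch assuming the hypothetical name `copy_transfer`; it always passes `-n`, so re-running it skips files that were already copied, and `GCLOUD="echo gcloud"` previews the command.

```shell
# Hypothetical wrapper around gcloud storage cp.
# Usage: copy_transfer STORAGE_BUCKET_URI DESTINATION [BILLING_PROJECT_ID]
# Set GCLOUD="echo gcloud" to print the command instead of running it.
copy_transfer() {
  src_uri=$1
  dest=$2
  billing_project=${3:-}
  # -r copies the whole tree; -n skips objects that already exist at dest.
  ${GCLOUD:-gcloud} storage cp -r -n "$src_uri" "$dest" \
    ${billing_project:+"--billing-project=$billing_project"}
}
```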

Sync the contents. To sync the contents of the data transfer to your bucket, use the gsutil rsync command described in the next section.

Access via the gsutil command-line tool

List the contents. To list the data in the bucket, use the gsutil ls command:

gsutil -u <YOUR_BILLING_PROJECT_ID> ls -r <storage-bucket-URI>

Parameters used:

-r (recursive) lists all objects in the bucket

-u uses the given project for billing (Requester Pays), if enabled for the destination

Optional parameters to use with the list command:

-l (long) lists additional information about each object (size, creation time, etc.)
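With gsutil, `-u` is a top-level option, so it has to appear before `ls`. The sketch below, using the hypothetical name `gsutil_list_transfer`, adds `-u` only when a billing project is given; `GSUTIL="echo gsutil"` prints the command instead of running it.

```shell
# Hypothetical wrapper around gsutil ls.
# Usage: gsutil_list_transfer STORAGE_BUCKET_URI [BILLING_PROJECT_ID]
# Set GSUTIL="echo gsutil" to print the command instead of running it.
gsutil_list_transfer() {
  uri=$1
  billing_project=${2:-}
  # Both expansions are empty unless a billing project was given, so -u and
  # its value are added together or not at all.
  ${GSUTIL:-gsutil} ${billing_project:+-u} ${billing_project:+"$billing_project"} \
    ls -r -l "$uri"
}
```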

Copy the contents to your bucket. To copy the data in the bucket, use the gsutil cp command:

gsutil -u <YOUR_BILLING_PROJECT_ID> cp -r <storage-bucket-URI> <your-bucket/path>

Parameters used:

-r (recursive) copies the entire directory tree

-u uses the given project for billing (Requester Pays), if enabled for the bucket

Optional parameters to use with the copy command:

-n (no-clobber) prevents overwriting existing files at the destination
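For large transfers, gsutil's top-level `-m` option runs the copy in parallel. A sketch with the hypothetical name `gsutil_copy_transfer`: it adds `-u` only when a billing project is given, and `GSUTIL="echo gsutil"` prints the command instead of running it.

```shell
# Hypothetical wrapper around gsutil cp.
# Usage: gsutil_copy_transfer STORAGE_BUCKET_URI DESTINATION [BILLING_PROJECT_ID]
# Set GSUTIL="echo gsutil" to print the command instead of running it.
gsutil_copy_transfer() {
  src_uri=$1
  dest=$2
  billing_project=${3:-}
  # Top-level options (-m for parallel copies, -u for billing) come before cp;
  # cp's own -r (recursive) and -n (no-clobber) come after it.
  ${GSUTIL:-gsutil} -m ${billing_project:+-u} ${billing_project:+"$billing_project"} \
    cp -r -n "$src_uri" "$dest"
}
```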

Sync the contents. Use rsync if you would like to copy only files that are new or updated. Learn more about the gsutil rsync command.

gsutil -u <YOUR_BILLING_PROJECT_ID> rsync -r <storage-bucket-URI> <your-bucket/path>

Parameters used:

-r (recursive) syncs the entire directory tree

-u uses the given project for billing (Requester Pays), if enabled for the bucket

NOTE:

The parameter -u <YOUR_BILLING_PROJECT_ID> is only required if Requester Pays was enabled during setup. If not, including it will result in an error. If you’re unsure, please contact your administrator or support.
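Before a first sync, a dry run shows what would be copied. Note that in `gsutil rsync`, `-n` means "dry run" (report what would be copied without copying), unlike `-n` in `cp`. The sketch below uses the hypothetical name `gsutil_sync_transfer` and a hypothetical `DRY_RUN` variable; `GSUTIL="echo gsutil"` prints the command instead of running it.

```shell
# Hypothetical wrapper around gsutil rsync.
# Usage: gsutil_sync_transfer STORAGE_BUCKET_URI DESTINATION [BILLING_PROJECT_ID]
# Set DRY_RUN=1 to only report what would be copied.
# Set GSUTIL="echo gsutil" to print the command instead of running it.
gsutil_sync_transfer() {
  src_uri=$1
  dest=$2
  billing_project=${3:-}
  dry_run_flag=
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # For rsync, -n is "dry run", not no-clobber as it is for cp.
    dry_run_flag=-n
  fi
  # $dry_run_flag is left unquoted on purpose so it vanishes when empty.
  ${GSUTIL:-gsutil} -m ${billing_project:+-u} ${billing_project:+"$billing_project"} \
    rsync -r $dry_run_flag "$src_uri" "$dest"
}
```

Run it once with `DRY_RUN=1`, review the output, then run it again without it. Without rsync's `-d` option, nothing is deleted from the destination.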

Consuming a Data Product via Data Fulfillment in Sledhouse

From the Data Fulfillment list page, click the Data Consumer whose access details you would like to see.

Once a data share has completed, select Access Data.

Select the copy icon and send the copied details to your data consumer. Additionally, you can follow the steps described above for Bobsled Transfers.

NOTE:

• When using Bobsled Sledhouse Data Fulfillment, Bobsled provides discrete paths for individual Data Products rather than a single bucket.
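Because each Data Product has its own path, a consumer who receives several paths can copy them in a loop. A minimal sketch, assuming the paths are gs:// URIs copied from the Access Data dialog; `copy_data_products` and the `BILLING_PROJECT` variable are hypothetical, and `GCLOUD="echo gcloud"` prints the commands instead of running them.

```shell
# Hypothetical helper: copy several Data Product paths into one destination.
# Usage: copy_data_products DESTINATION PATH_URI...
# Set BILLING_PROJECT only if Requester Pays is enabled.
# Set GCLOUD="echo gcloud" to print the commands instead of running them.
copy_data_products() {
  dest=$1
  shift
  for product_uri in "$@"; do
    # One recursive, no-clobber copy per Data Product path.
    ${GCLOUD:-gcloud} storage cp -r -n "$product_uri" "$dest" \
      ${BILLING_PROJECT:+"--billing-project=$BILLING_PROJECT"}
  done
}
```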