.png)

Diagram representing File Storage sources (e.g., Amazon S3), and Table destinations (e.g., Databricks).

Bobsled offers data delivery from a file storage source to a data warehouse destination by turning the underlying files in the selected folders of the source into tables in the data warehouse destination.

Supported source file formats

Bobsled supports the current source file and compression formats:

Parquet (snappy)

CSV (gzip)

JSON (new-line delimited JSON) (gzip)

Avro

*Bobsled does not currently support .zip compression

Table Schemas

Bobsled extracts the schema from the underlying files and uses that to create the tables in the data warehouse destination. Using Bobsled data types as a mediator, it maps to standard warehouse destination data types, providing maximum flexibility.

For self-describing file formats, the schema from a random file will be used.

For file formats without a defined schema, Bobsled will auto-detect the schema.

CSV and JSON auto-inference

Bobsled will automatically infer the schema and types for each column in a CSV or JSON file. The type detection works by taking a sample of the data and attempting to convert the values in each column to the candidate types. If the conversion is unsuccessful, the candidate type is removed from that column's set of candidate types. After all samples have been handled, the candidate with the highest priority is chosen.

See CSV loading options to understand better the CSV format details required.

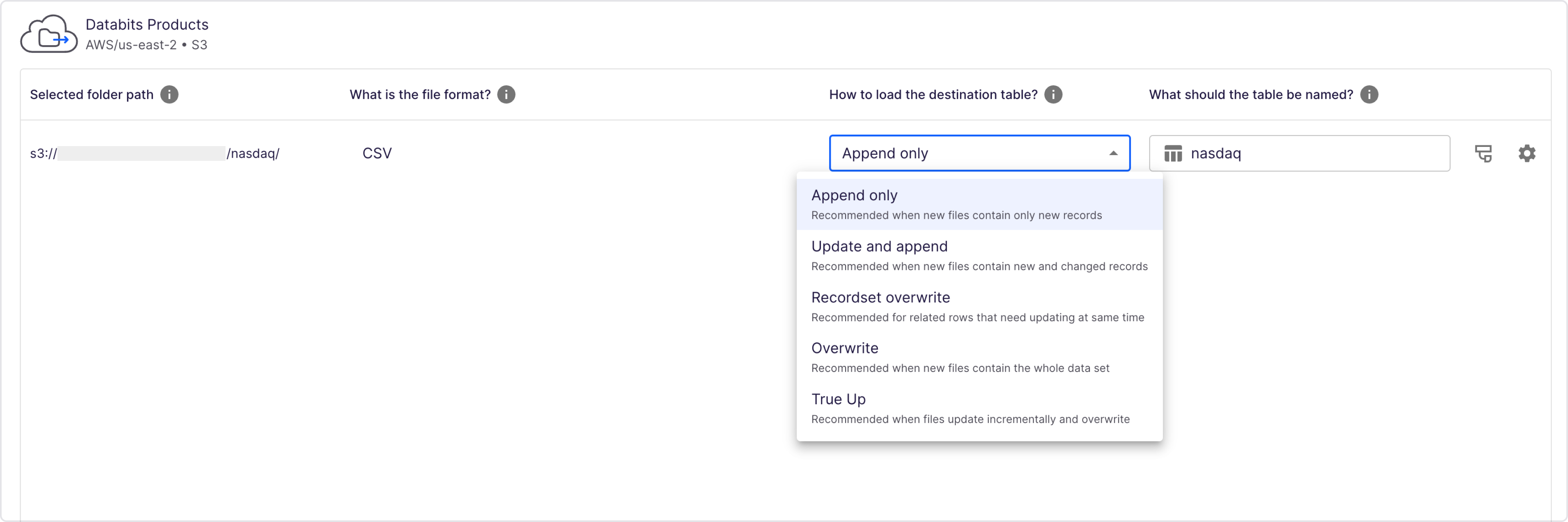

Table loading patterns

There are four different ways to load the folders into tables in the data warehouse. These options will load and transform the data into the warehouse to make sure that the data is ready for the consumer.

Append Only

Using the append only loading pattern, Bobsled will load all records in the file(s) in the underlying folder into the table in the data warehouse destination. This is the most basic loading option that matches the folder contents with the table in the data warehouse destination.

This pattern is well-suited for cases where new files in the underlying folder contain new records. A few examples of a good fit for the append only loading patterns are:

Event data or,

Market price data where new data will be logged.

NOTE:

When configuring a transfer through the application, the replication pattern to ‘append only’ is set by default.

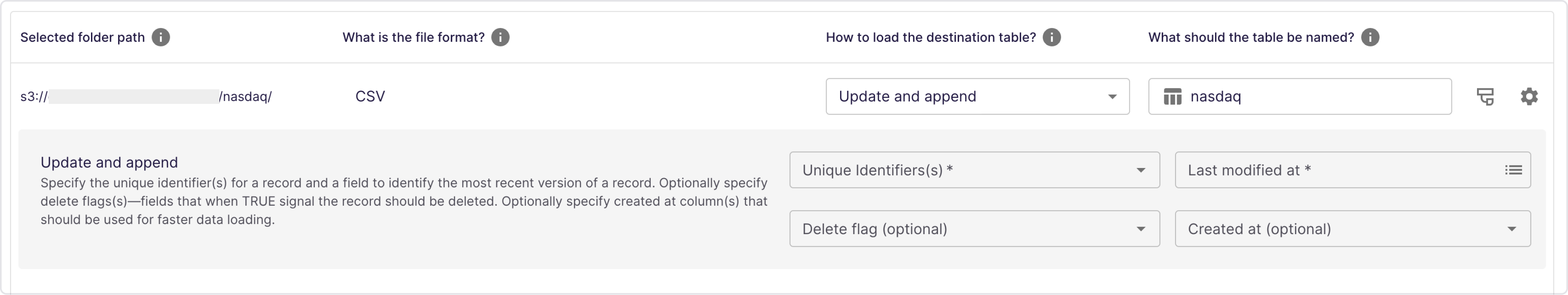

Update and append

Using the update and append loading pattern, Bobsled will ensure that only the latest version of each record will be available in the destination table. This process is executed by providing a key for Bobsled to identify each record’s latest state.

This loading pattern can optionally be configured to delete rows that are marked for deletion with a delete flag column. This column must be a Boolean data type, and the value should be set to True for records to be deleted.

This pattern is well-suited for datasets where records evolve or move through various states. A few examples of a good fit are datasets of orders that include changes to order status or company reference information, where details can change.

This pattern is transactionally managed, for each automation, all new data is promoted simultaneously regardless of whether the records were newly appended or updated.

When using update and append, you will be required to provide Bobsled with two values in your dataset: a unique identifier and a last modified at field.

Unique identifier: A key or composite key that should be unique and distinctive for each record that will be updated.Last modified at: A key or composite key that Bobsled will use to identify the most recent version of each record. If your dataset does not have a last modified value for Bobsled to identify, consider using a different loading pattern.Delete flag (optional): A Boolean key that Bobsled will use to determine if a given row should be deleted from the destination table. Bobsled will make sure any column marked for deletion is not added and remove any row with the same unique identifier for new records marked for deletion.Created at (optional): A key or composite key that Bobsled will use to filter down theMERGEstatement run in the destination data warehouse to improve the performance of the load by pruning files containing historical records. This should be used in cases where the table is large or updates very frequently and when yourcreated_atfield doesn’t change and is not nullable. Nulls in the source data can lead to duplicates. When using the created at key, it is suggested to cluster the table by that field for optimal loading performance and efficiency.

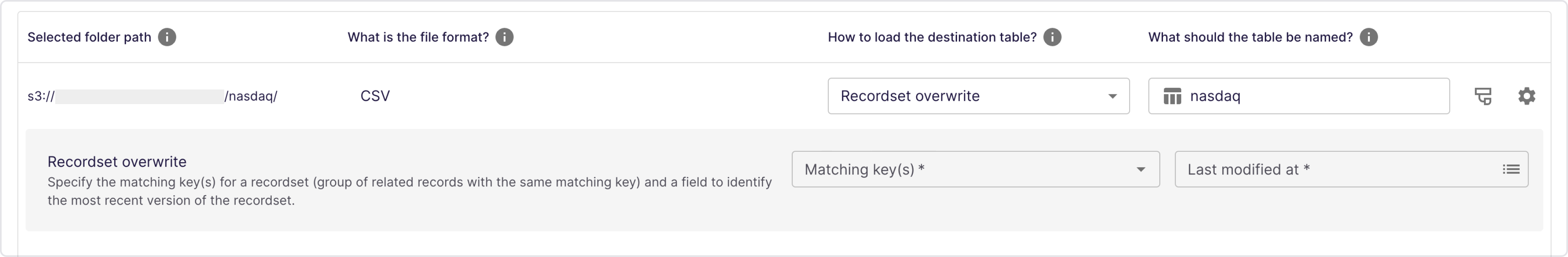

Recordset Overwrite

Using the recordset overwrite loading pattern, Bobsled will ensure that only the latest version of a given set of related records (recordset) is present. This pattern is well-suited for datasets where one event or record is stored on multiple rows and all rows need inserting, updating, or deleting at the same time. Bobsled ensures that only the latest version of the recordset is present and queryable.

Use this pattern when:

Your data has multiple related rows that should be treated as a unit

You need all related records to be updated together

Records in a set may be added, modified, or removed over time

This pattern is transactionally managed, for each automation run all new data is promoted simultaneously regardless of whether the records were newly added, updated, or deleted.

When using recordset overwrite, you will be required to provide Bobsled with two values in your dataset: a matching key(s) and a last modified at field(s).

Matching key: A key or composite key that is used to relate all records within the same recordsetLast modified at: A key or composite key that Bobsled will use to identify the most recent version of the recordset. If your dataset does not have a last modified value for Bobsled to identify, consider using a different loading pattern.

With Recordset Overwrite, Bobsled:

Identifies records belonging to the same set (using

order_idas the matching key)Determines the latest version (using

last_modified_at)Replaces all old records with the new version

NOTE:

If multiple rows are duplicates with the same matching key(s) and last modified at key(s) all such rows will be present in the destination table.

SQL for Recordset Overwrite (Pseudo SQL)

CREATE OR REPLACE VIEW final_recordset_overwrite AS

SELECT * FROM staging_recordset_overwrite

QUALIFY RANK() OVER (

PARTITION BY matching_key -- Matching key

ORDER BY last_modified_timestamp DESC NULLS LAST -- Last modified timestamp

) = 1;Overwrite

Using the overwrite loading pattern, Bobsled will take the new file(s) in an underlying folder and overwrite the existing data by truncating the table in the data warehouse destination, then loading all records in the latest file(s).

This pattern is well-suited for folders where new files written represent the latest complete data set. This is a common approach when a large portion of records in the table are altered and overwriting may be more efficient than updating, inserting, and deleting records in the table; or when the data set is sufficiently small enough that reloading the table is not an expensive operation.

Tables are transactionally updated; there will never be an empty or incomplete data warehouse destination table during a Bobsled load.

Three methods for identifying the new complete set of data:

.png)

Completion file. A good option for larger tables that write data in multi-part files.To ensure Bobsled can identify the right files that represent the latest version of the table, there are a few requirements for how the data must be structured in the source folders:

Each set of files representing a version of the table must be in their folder.

A

Completion filemust be written to identify when the complete set of data has been written to the folder (e.g. a file named_SUCCESS). It is important to write the completion file after the full set of data has been written so Bobsled moves the full set of data for the given table.As new versions are written to object storage with new completion meta files, Bobsled will continue to update the table with the latest set of data as identified by the latest completion meta file.

NOTE:

If When using overwrite, you will be required to provide Bobsled with the name of your meta file that your pipeline uses. By default, Bobsled uses the name _SUCCESS.

.png)

Latest file. A good option for smaller tables where data is written into a single file. The latest file method will find the latest, non-hash, file in the folder or sub-folders associated with a given table and consider the full state of the table..png)

Entire folder. This mode mirrors all contents from the selected source folder to the destination table. It is ideal for scenarios where you need to maintain complete synchronization between source and destination, ensuring that the destination table exactly matches the contents of all files in the source folder.

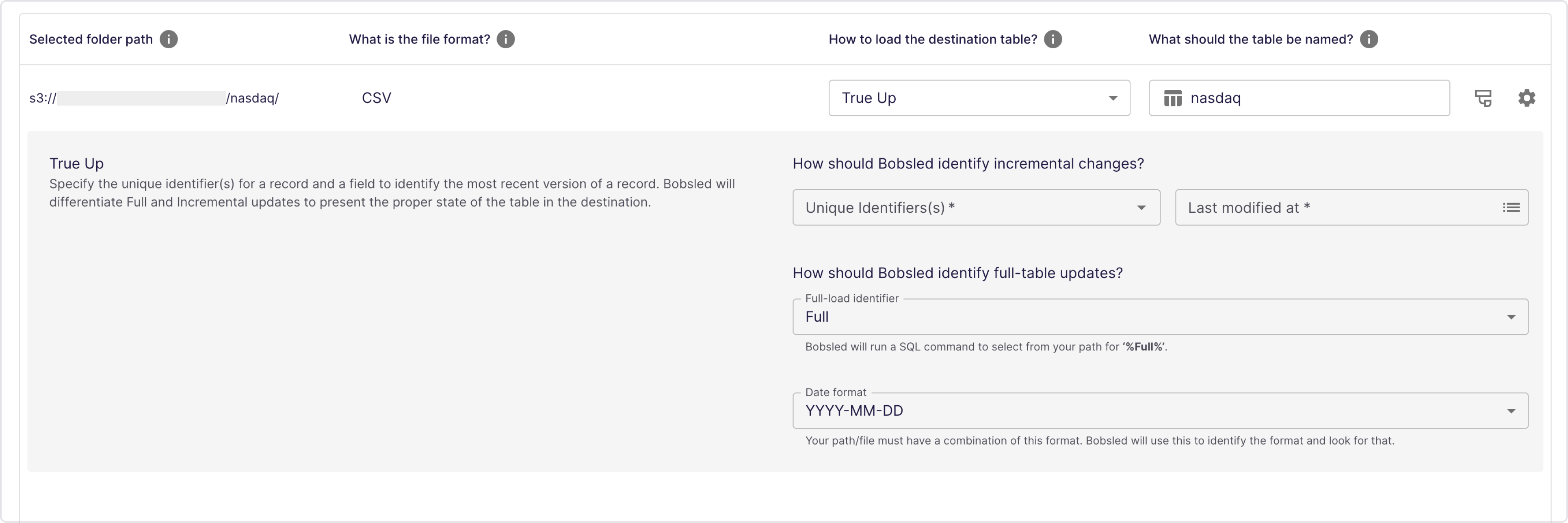

True up

The true-up loading pattern is used when there are files representing daily incremental updates, as well as weekly or monthly full overwrite files that reset the table. Bobsled ensures that the most recent version of each record is present and can be queried. This pattern is suitable for datasets where daily incremental update and append style updates need to be combined with periodic full overwrite updates.

Bobsled requires two pieces of metadata in the files to identify whether they represent "full" overwrite data or incremental updates, and to determine the appropriate date to use for each file. This data is configured by the account team for each customer.

To use the true-up loading pattern, you need to configure four values in your dataset:

Unique identifier: A key or composite key that should be unique and distinct for each record that will be updated.Last modified at: A key or composite key that Bobsled will use to identify the most recent version of each record. If your dataset does not have a last modified value that Bobsled can use, consider using a different loading pattern.Full load identifier: A string to match to identify which files/folders represent a full copy of the data (e.g. "Full")Date format: Format used in key names to represent the date associated with each file.

For tables that have two source folders, both folders should be configured to send to the same table name and have matching values for unique identifier and last modified at fields.

Change data capture (CDC)

Bobsled will process incremental data changes from your CDC stream and apply insert, update, and delete operations transactionally to your data warehouse. This pattern handles out-of-order events and maintains data consistency by tracking the most recent version of each record using timestamps and primary keys.

This method is well-suited for real-time data synchronization from transactional databases, event-driven architectures that capture change operations, and systems using CDC technologies like Debezium, AWS DMS, or Databricks. With each automation, all CDC events are processed transactionally while maintaining the latest state of records.

You will be required to provide Bobsled with three values in your dataset: a primary key, last modified timestamp, and change type column.

Primary Key: One or more columns that form a unique identifier for each record, used to match records between source and destination for updates and deletes.Last Modified At: A timestamp or datetime column which tracks when changes occur in the source system, used to determine the most recent version of each record and handle out-of-order events.Change Type: A column indicating the CDC operation type with values "I"/"INSERT" for inserts, "U"/"UPDATE" for updates, and "D"/"DELETE" for deletes.Note - Bobsled ignores I or U operation types and will update or insert as required by the state of the table while making sure to reflect the latest state of each record by primary key

Created At(optional): A column used to optimize MERGE performance by filtering historical records, improving load performance for large tables.This column cannot be nullable.

We recommend also clustering the table by the same column.

NOTE: The Change Type column is excluded from the destination table schema and used only for processing logic. Ensure your CDC system generates distinct timestamps for the same primary key when possible, and verify that timestamps have sufficient precision for high-frequency updates.

Updating files in source bucket

When a file in the source bucket is updated, Bobsled will load the updated data into the destination data warehouse table. This behavior applies to all loading patterns except for the append only pattern. In that case, newly updated files are not moved or loaded into the data warehouse. If data corrections are needed, either create new files with the corrected data or choose a loading pattern that supports file updates.

Records in updated files are treated in the same manner as records in new files and are subject to the loading behavior specified by the loading pattern and user configuration.

Hive partitions

Bobsled can identify Hive partitions and load the data encoded in the folder into the destination table. By default, the data type for Hive partitions is STRING, however, this can be overridden similarly to other data types.

CSV loading options

Bobsled uses CSV options for each data warehouse to effectively load data from CSV files. Below are the options used for each warehouse:

Snowflake ↗

Option Name | Setting | Definition |

|---|---|---|

|

| Specifies the character set of the source data. |

|

| Specifies the compression algorithm used for data files. |

|

| Defines the encoding format for binary string values in the data files |

| Auto-detected by Bobsled | Defines the character or string used to separate fields (columns) within each row (line) in the data file. |

|

| Specifies the escape character used for enclosed field values. |

|

| Specifies the escape character used for unenclosed field values. |

|

| One or more characters that separate records in an input file. There is no option to set a custom record delimiter. |

|

| If set to FALSE, an error is not generated, and the load continues. |

|

| Specifies the strings that represent NULL values. |

|

| When loading data, specifies whether to insert SQL NULL for empty fields in an input file. |

|

| Character used to enclose strings. |

|

| Skips the header row containing column names. |

|

| Defines the format of timestamp values in the data files. |

|

| Defines the format of time string values in the data files. |

|

| Defines the format of date values in the data files. |

|

| Use the Unicode replacement character (�) to replace all invalid UTF-8 characters during loading. |

BigQuery ↗

Option Name | Setting | Description |

|---|---|---|

|

| The character encoding of the data |

| Auto-detected by Bobsled | Defines the character or string used to separate fields (columns) within each row (line) in the data file |

|

| The value that is used to quote data sections in a CSV file |

|

| Skips the header row containing column names |

|

| Specifies a string that represents a null value |

|

| When set to true, rows with extra column values that do not match the table schema are ignored and are not loaded. |

|

| Accept rows that are missing trailing optional columns. The missing values are treated as nulls. |

|

| Allow new line characters in the data if quoted |

Databricks ↗

Option Name | Setting | Description |

|---|---|---|

|

| The name of the encoding CSV files. |

| Auto-detected by Bobsled | The separator string between columns. |

|

| The escape character to use when parsing the data. |

|

| Nulls are inserted for fields that cannot be parsed correctly. |

| Default: | A string between two consecutive CSV records. |

|

| String representation of a null value. |

|

| The character is used for escaping values where the field delimiter is part of the value. |

|

| Skips the header row containing column names |

| Default: | The format for parsing timestamp strings. |

| default: | The format for parsing date strings. |