.png) Diagram representing Table sources (e.g., Snowflake), and Table destinations (e.g., Databricks).

Diagram representing Table sources (e.g., Snowflake), and Table destinations (e.g., Databricks).

To deliver data from a data warehouse (source tables) to a data warehouse destination (destination tables), Bobsled seeks to mirror the source tables, prioritizing correctness and efficiency to the data warehouse destination.

Table replication patterns

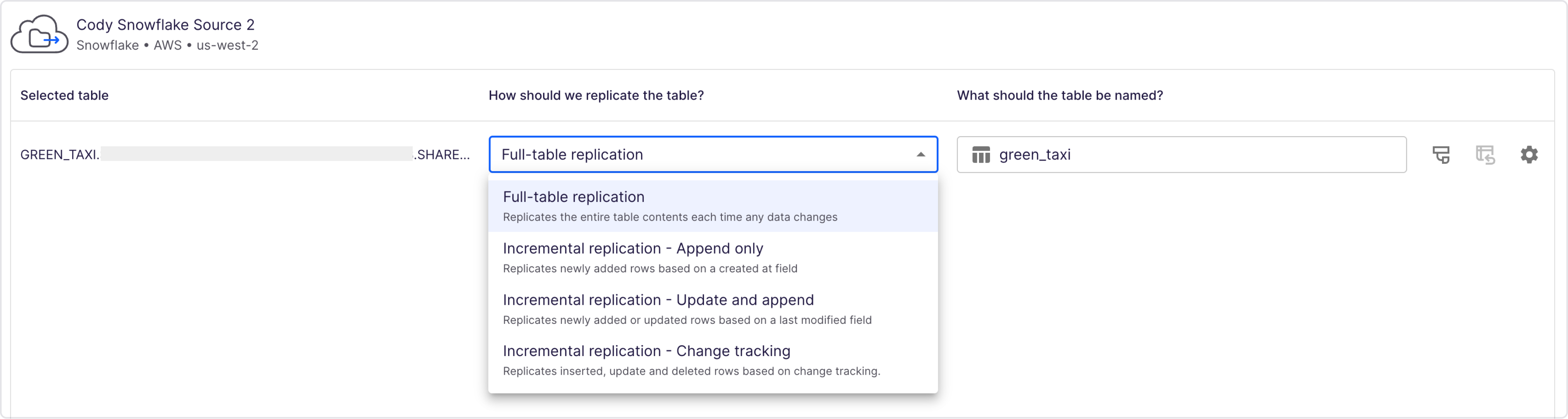

There are three different ways to replicate tables from a data warehouse to another data warehouse. The selection depends on your ELT/ELT approach in your data warehouse as well as the scale of the data.

Full-table replication

Using the full-table replication method, Bobsled will extract the full table from the source data warehouse and replace the contents in the data warehouse destination.

This pattern is well-suited for:

Tables where the ETL overwrites much of the data each pipe runs, or where no timestamps are maintained to understand which records have changed and,

Small tables where the contents don't change too often.

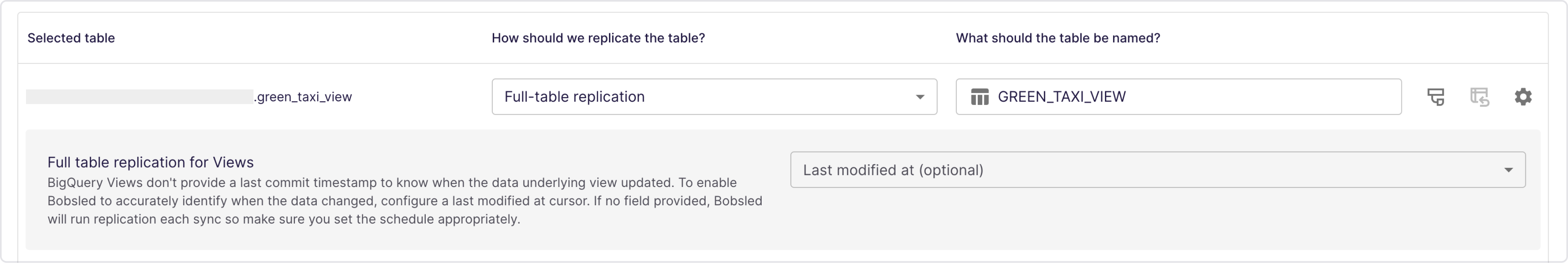

Cursor to control full-table replication on views in BigQuery

It is recommended to use a 'last_modified_at' field to help manage data copying from Google BigQuery views. This acts as a bookmark for the last time the data was changed.

To set this up, make a new source share in the BigQuery UI, and choose the necessary column as the last_modified_at bookmark in the replication settings. Bobsled will use the maximum value in the last_modified_at field and see if that has increased since the last sync.

The system will automatically update full-table replication views every 24 hours if a last_modified_at column is not specified.

NOTE:

This only works for views, not tables.

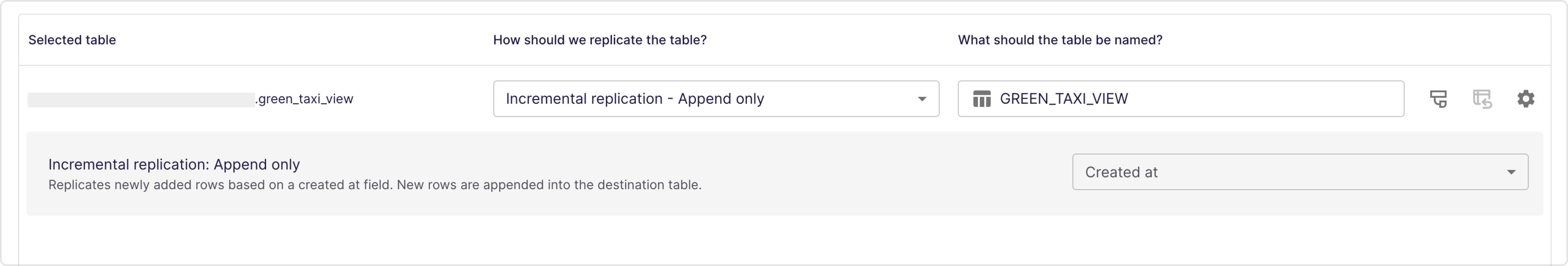

Incremental replication - Append only

Using the incremental replication - append only method, Bobsled will identify new records based on a created at field in the table. This field is used as a bookmark, and each time Bobsled runs new rows that have a higher value of that field will be identified and transferred to the destination.

This pattern is well-suited for:

Cases where the rows in the table are immutable records and old records are not updated (e.g. event data or logs).

You will be required to provide Bobsled with one value in your dataset:

Created at: A key or composite key that Bobsled will use to identify new records. If your dataset does not track when new records are created, consider using a different loading pattern.

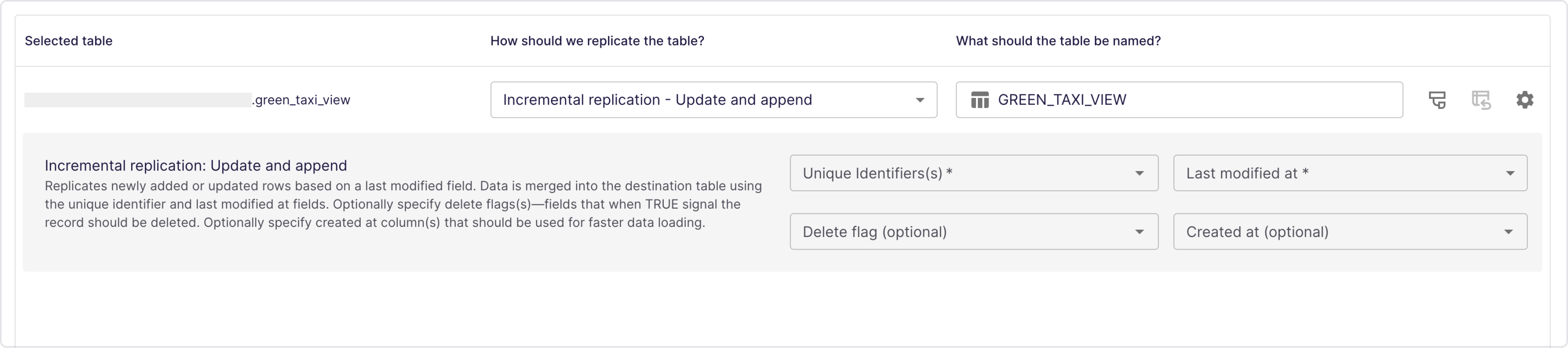

Incremental replication - Update and append

Using the incremental replication - Update and append, Bobsled will identify newly updated records based on a updated at field in the table. Bobsled will then replicate the newly added or updated records to the destination warehouse and ensure that only the latest version of the record, identified by unique identifier, is present in the destination table.

This method is well-suited for:

Datasets where records evolve or move through various states such as e-commerce orders where the status can change. In each automation, all new data is promoted transactionally regardless of whether the records were newly appended or updated.

You will be required to provide Bobsled with two values in your dataset: a unique identifier(s) and a last modified at field.

Unique identifier(s): A key or composite key that should be unique and distinctive for each record that will be updated.Last modified at: A key that Bobsled will use to identify the most recent version of each record. If your dataset does not have a last modified value for Bobsled to identify, consider using a different loading pattern.Delete flag (optional): A key that Bobsled will use to determine if a given row should be deleted from the destination table. Bobsled will make sure any column marked for deletion is not added and remove any row with the same unique identifier for new records marked for deletion.Created at (optional): A key that Bobsled will use to filter down theMERGEstatement run in the destination data warehouse to improve the performance of the load by pruning files containing historical records. This should be used in cases where the table is large or updates very frequently and when yourcreated_atfield doesn’t change and is not nullable. Nulls in the source data can lead to duplicates. When using the created at key, it is suggested to cluster the table by that field for optimal loading performance and efficiency.

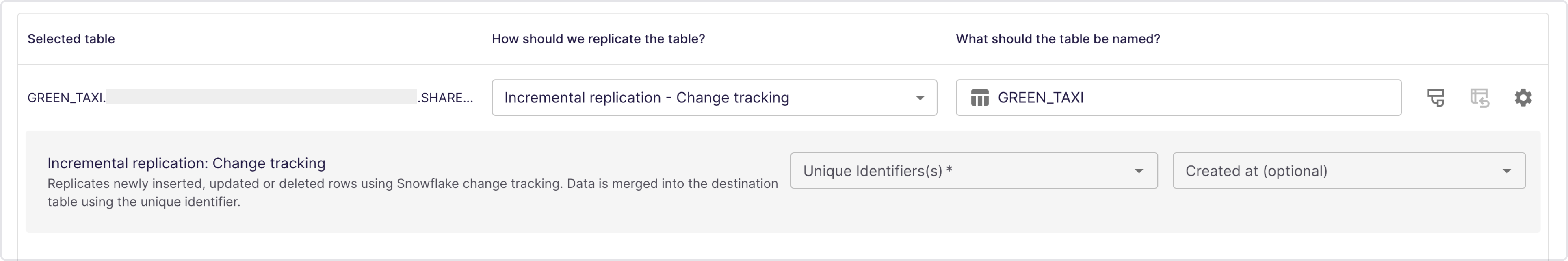

Snowflake source only: Row based replication - Change tracking

Bobsled will identify newly updated records by querying the Snowflake change stream to look for newly inserted, updated or deleted rows. This requires change tracking to be turned on for any given table or table(s) referenced within a view.

This method is well-suited for:

Datasets where records evolve or move through various states, such as e-commerce orders, where the status can change. With each automation, all new data is promoted transactionally regardless of whether the records were newly appended or updated.

This method should be used if any rows are deleted from your source table, and you need to make sure they are deleted downstream.

You will be required to provide Bobsled with one value in your dataset: a unique identifier(s).

Unique identifier(s):A key or composite key that should be unique and distinctive for each record that will be updated.Created at (optional): An optional key that Bobsled will use to filter down the MERGE statement run in the destination data warehouse to improve the performance of the load by pruning files containing historical records. This should be used in cases where the table is large or updates very frequently and when your created_at field doesn’t change and is not nullable. When using the created at key, it is suggested to cluster the table by that field for optimal loading performance.

When change tracking is enabled on views, it is recommended that you always add a column alias for each column in the SELECT statement. This improves Snowflake’s ability to parse and relay the schema properly.

NOTE:

If a share is paused for longer than the data retention window, a backfill will be automatically kicked off. As such, Bobsled recommends increasing the table time travel window on tables that are used in change tracking replication pattern to enabling pausing—when required.

Backfilling tables

Bobsled enables manually triggering a backfill of a table when you need to restate the table. On the next scheduled sync, Bobsled will extract the full table and transactionally replace the existing table in the destination.

Backfills can be manually requested in the UI. Bobsled will also automatically trigger a backfill when required in certain cases (e.g,. replication pattern change).